Monitoring and Evaluation in E-Learning: Five M&E Practices to Measure and Boost the Impact of Online Education Programs

E-learning programs have surged in popularity during the COVID-19 crisis, as educational institutions, businesses, non-profits and other organizations have sought safer, socially distanced options for their classes and training courses. Some of these programs are fully online, while others take a hybrid approach that combines in-person and online components. Their formats range from training delivered on mobile apps or e-learning platforms to virtual exchange, an online education practice that allows for international collaborative learning through sustained, technology-enabled interactions that let students across regions engage with one another in real time.

As we move (hopefully) into a post-pandemic world, these approaches are likely to continue to become more prevalent, due to the multiple benefits they offer both students and providers — and to their applicability to everything from traditional academic programs to skills development initiatives. They’re being used in countries around the world to increase equity of access to high-quality vocational and entrepreneurship training, and they offer a sustainable solution to the challenge of upskilling at scale.

Virtual exchange is an area of particular interest within the broader online education space, as global development-focused organizations are using it in a number of innovative ways. For instance, the William Davidson Institute (WDI) at the University of Michigan — an independent, non-profit research and educational organization — is utilizing the approach in its Business and Culture program. WDI, which serves both profit-seeking and non-profit firms by creatively applying business skills in low- and middle-income countries, has decades of experience when it comes to in-person education and training. In recent years, it has expanded its virtual learning offerings, responding to a trend that has only grown in importance during the pandemic.

The Business and Culture program connects undergraduate students in Egypt, Lebanon, Libya and the U.S. (at the University of Michigan), enabling them to develop connections and critical international business competencies through synchronous virtual sessions and a cross-cultural team-based final project. Soliya, an international non-profit and pioneering virtual exchange provider, takes a similar approach. Combining the power of interactive technology with the science of dialogue, its Connect Program fosters interdisciplinary cross-cultural exchanges and provides critical thinking, communication and digital media literacy skills to post-secondary students in over 200 colleges and universities in 35 countries in North America, Europe, the Middle East and North Africa (MENA) region, and South and Southeast Asia. (Both programs are supported by the Stevens Initiative, which is sponsored by the U.S. Department of State, with funding provided by the U.S. Government, and is administered by the Aspen Institute.)

To support organizations and programs that are employing virtual exchange, we have compiled the following five key monitoring and evaluation (M&E) insights. Based on WDI and Soliya’s experience, these go beyond commonly used training evaluation frameworks such as the Kirkpatrick Four Levels and training effectiveness questions, and can be applied to a variety of e-learning programs.

Insight 1: Design your M&E plan to measure impact and enable adaptive management

WDI’s performance measurement team consciously designs M&E strategies to measure impact and support efforts to strengthen programs in real time. In other words, its M&E plan enables adaptive management, a term USAID defines as “an intentional approach to making decisions and adjustments in response to new information and changes in context.” For example, students in the first semester of the Business and Culture program asked for more engagement with their group members and peers. In response, the following semester WDI placed students in their cross-cultural project groups at the start of the semester rather than toward the middle, when the final project was assigned. Additionally, the program used technology solutions like Padlet and Zoom breakout rooms to facilitate interactions outside the project groups. (WDI published a video explaining how to design an M&E strategy that measures impact and enables adaptive management here.)

Similarly, over its last 18 years of programming, Soliya has included a variety of process and content-related questions in its surveys. It has used these responses — as well as feedback collected through qualitative research — to adapt and further refine its program design.

Insight 2: Implement deliberate strategies to maximize your survey completion rates

WDI and Soliya use an electronic pre- and post-program assessment survey to collect data from students. This forms the cornerstone of both our M&E strategies, allowing us to measure impact and enable real-time, evidence-based decision-making for adaptive management. Ensuring students complete the surveys is always a challenge, especially during a pandemic when they may face digital fatigue. Yet achieving a high response rate is essential, and we strongly recommend not relying on luck.

To achieve an 85% response rate as requested by our mutual funder, here are the key strategies WDI and Soliya have implemented:

- Design short surveys that students can complete within 5 to 10 minutes.

- Automatically redirect students to the pre-program survey as part of the mandatory program registration process, which minimizes the communication and actions requested of them.

- Reduce the burden on students by setting aside time in class to complete the surveys.

- Send students reminders to complete the surveys. We found that 30% of enrolled students across the U.S. and MENA regions completed the survey after receiving additional reminders (based on WDI’s sample of 255 completed pre- and post-program assessment surveys in the winter/spring 2021 semester).

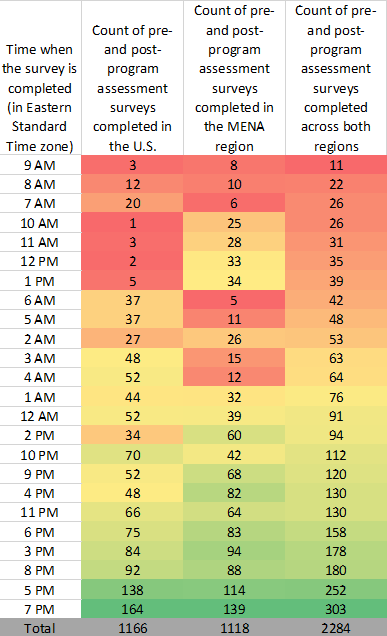

- Consider sending reminders in the evening in the students’ local time zone. For example, in the winter/spring 2021 semester, 39% of Soliya’s sample of 2,284 students in the U.S. and MENA region completed the pre- and post-program assessment survey between 5:00 and 8:00 PM EST (all sessions took place Monday through Thursday between 7:00 AM and 2:00 PM EST).

- Consider making the completion of the post-program survey a required step for receiving a program certificate. (This may not always be feasible: For example, for most of Soliya’s students, the dialogue exchange is an integrated component of their academic courses; as such, receiving full credit for completing the virtual exchange program could not be tied to survey completion.)
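To make progress against a response-rate target visible during the semester, the tracking behind these strategies can be sketched in a few lines of Python. This is only an illustrative sketch, not the tooling WDI or Soliya use: the `SurveyStatus` record, its field names and the sample roster are all hypothetical, and only the 85% target comes from the text above.

```python
from dataclasses import dataclass

TARGET_RATE = 0.85  # funder-requested response rate cited in the article


@dataclass
class SurveyStatus:
    """Hypothetical per-student record; field names are assumptions."""
    student_id: str
    completed: bool
    reminders_before_completion: int = 0


def response_rate(statuses):
    """Share of enrolled students who completed the survey."""
    if not statuses:
        return 0.0
    return sum(s.completed for s in statuses) / len(statuses)


def completed_after_reminder_rate(statuses):
    """Share of enrolled students who completed only after a reminder."""
    if not statuses:
        return 0.0
    late = sum(
        1 for s in statuses
        if s.completed and s.reminders_before_completion > 0
    )
    return late / len(statuses)


def needs_reminder(statuses):
    """IDs of students who have not yet completed the survey."""
    return [s.student_id for s in statuses if not s.completed]


# Made-up roster for illustration only.
roster = [
    SurveyStatus("s1", True, 0),
    SurveyStatus("s2", True, 1),
    SurveyStatus("s3", False, 2),
    SurveyStatus("s4", True, 0),
]

rate = response_rate(roster)
print(f"Response rate: {rate:.0%} (target {TARGET_RATE:.0%})")
print("Target met:", rate >= TARGET_RATE)
print("Still to remind:", needs_reminder(roster))
```

Tracking the share of students who respond only after a reminder, as in `completed_after_reminder_rate`, is one way to check whether reminders are worth the extra communication for a given cohort.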

Insight 3: Include an “attention check” question in the survey to maintain data integrity and build confidence in your collected data

To ensure students are reading the questions before responding (as opposed to randomly selecting responses on multi-item scale questions), Soliya implements attention checks for both the enrolled and comparison group students. This consists of a simple question similar to “This is a test question. Please choose ‘1’ as the response for this question.” Soliya discards the entire response of any student who does not answer the attention check question correctly. Through a small community of practice organized by the Stevens Initiative and its independent evaluation partner, RTI International, WDI learned about this tactic and now includes the attention check question as well.
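The filtering step described above could look something like the following Python sketch. It assumes each survey response is a dictionary and that the attention check is stored under a key named `attention_check` with an expected answer of `"1"`; both the key name and the data layout are hypothetical, not details of either organization's survey platform.

```python
ATTENTION_ITEM = "attention_check"  # hypothetical column name
EXPECTED_ANSWER = "1"               # the answer the test question asks for


def split_by_attention_check(responses):
    """Separate responses that pass the attention check from those that fail.

    A missing or blank answer counts as a failure, so the whole response
    is set aside rather than partially used.
    """
    kept, discarded = [], []
    for response in responses:
        answer = str(response.get(ATTENTION_ITEM, "")).strip()
        if answer == EXPECTED_ANSWER:
            kept.append(response)
        else:
            discarded.append(response)
    return kept, discarded


# Made-up responses for illustration only.
responses = [
    {"student_id": "a", "attention_check": "1", "q1": 4},
    {"student_id": "b", "attention_check": "3", "q1": 5},
    {"student_id": "c", "q1": 2},                      # check left blank
    {"student_id": "d", "attention_check": 1, "q1": 3},  # numeric export
]

kept, discarded = split_by_attention_check(responses)
print("Kept:", [r["student_id"] for r in kept])
print("Discarded:", [r["student_id"] for r in discarded])
```

Keeping the discarded responses in a separate list, rather than deleting them, makes it easy to report how many were excluded and why.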

Insight 4: Use a combination of strategies to recruit students for a comparison group

Both Soliya and WDI are conducting quasi-experimental studies to attribute impacts on enrolled students to the program (the results are forthcoming in summer 2021 and 2022, respectively). However, recruiting a representative comparison group has proven challenging in a virtual environment. We recommend using a multi-pronged strategy, as implemented by both our organizations, to meet sample size requirements.

One way to do this is to recruit the comparison group students through existing institutional partners. For instance, Soliya’s Connect Program obtains a well-matched comparison group by recruiting students enrolled in similar classes taught by the same faculty members as those in the Connect program. And for its Business and Culture program, WDI advertises in student listservs. It also asks enrolled students and the instructors’ teaching assistants to invite undergrad friends at their university who have not taken the class to participate in the study.

Like Soliya, WDI also incentivizes comparison group students through a raffle, as these students tend to be less motivated to complete the survey. The raffle includes 10-22 prizes for participants who complete the surveys on time and pass the attention check question. The eligibility criteria are communicated to students in advance.
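As a rough illustration of how the raffle eligibility rules could be applied, the Python sketch below filters participants on the two criteria named above (on-time completion and a passed attention check) and then draws winners at random. The record layout, the field names and the optional `seed` parameter are assumptions made for this example; the article does not describe how either organization actually runs its draw.

```python
import random


def draw_raffle_winners(participants, n_prizes, seed=None):
    """Draw up to n_prizes distinct winners from eligible participants.

    Eligibility mirrors the criteria in the text: the survey was completed
    on time and the attention check was passed. The field names below are
    hypothetical.
    """
    eligible = [
        p["student_id"]
        for p in participants
        if p["on_time"] and p["passed_attention_check"]
    ]
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    k = min(n_prizes, len(eligible))
    return rng.sample(eligible, k)


# Made-up comparison group for illustration only.
participants = [
    {"student_id": "c1", "on_time": True, "passed_attention_check": True},
    {"student_id": "c2", "on_time": False, "passed_attention_check": True},
    {"student_id": "c3", "on_time": True, "passed_attention_check": False},
    {"student_id": "c4", "on_time": True, "passed_attention_check": True},
    {"student_id": "c5", "on_time": True, "passed_attention_check": True},
]

winners = draw_raffle_winners(participants, n_prizes=2, seed=2021)
print("Winners:", winners)
```

Recording the seed alongside the results is one way to let a third party re-run and audit the draw.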

Insight 5: Foster buy-in among partners for M&E activities to gain their support and accountability

It is critical to explain the goal and value proposition of measurement activities to all partners, including the participating instructors and enrolled students, to secure their buy-in for M&E activities. For example, both Soliya and WDI include these explanations in the informed consent form given to enrolled and comparison group students before they decide to participate in the study. In addition, WDI makes announcements in class before administering the survey, highlighting student privacy and confidentiality — see the script it used here.

Soliya conducts extensive conversations with participating faculty about the purpose of the research at the start of the semester. In general, faculty find great value in such research, as the findings add to their understanding of the impact of integrating virtual exchange within their courses. Furthermore, engaging in these conversations early on improves transparency and accountability with partners.

Both WDI and Soliya also conduct “pause and reflect” sessions with instructors post-semester, to discuss survey findings and any relevant adaptations to the program. This way, instructors receive immediate value from the M&E research, as it enables them to fine-tune their assignments and pre-readings, include more time for Q&As with students during class, and take other steps to make their next course more effective. In return, faculty buy-in ensures their support of our data collection efforts, which they encourage by agreeing to send reminders, allowing us to engage with their teaching assistants, and allowing time during class to conduct these activities. And finally, both WDI and Soliya have received Institutional Review Board approvals to demonstrate that the research is conducted ethically, which improves trust among all.

To explore the insights discussed above in more detail, or to learn about other innovative M&E approaches and online/hybrid educational programmatic best practices, feel free to contact the authors (yaquta@umich.edu and salma@soliya.net).

Yaquta Kanchwala Fatehi is a program manager for the Performance Measurement Initiative of the William Davidson Institute at the University of Michigan, and Salma Elbeblawi is a Chief Program Officer at Soliya. The authors would like to thank Meghan Neuhaus for her review.

Editor’s note: WDI is NextBillion’s parent organization.

Photo caption: A University of Michigan class employing virtual exchange (pre-pandemic). Photo courtesy of the William Davidson Institute.
